Automated testing entails much more than simply creating tests and enabling them. A “set it and forget it” approach won’t get you very far with automated tests, particularly automated browser tests, which interact with the ever-changing frontend of your application or website.

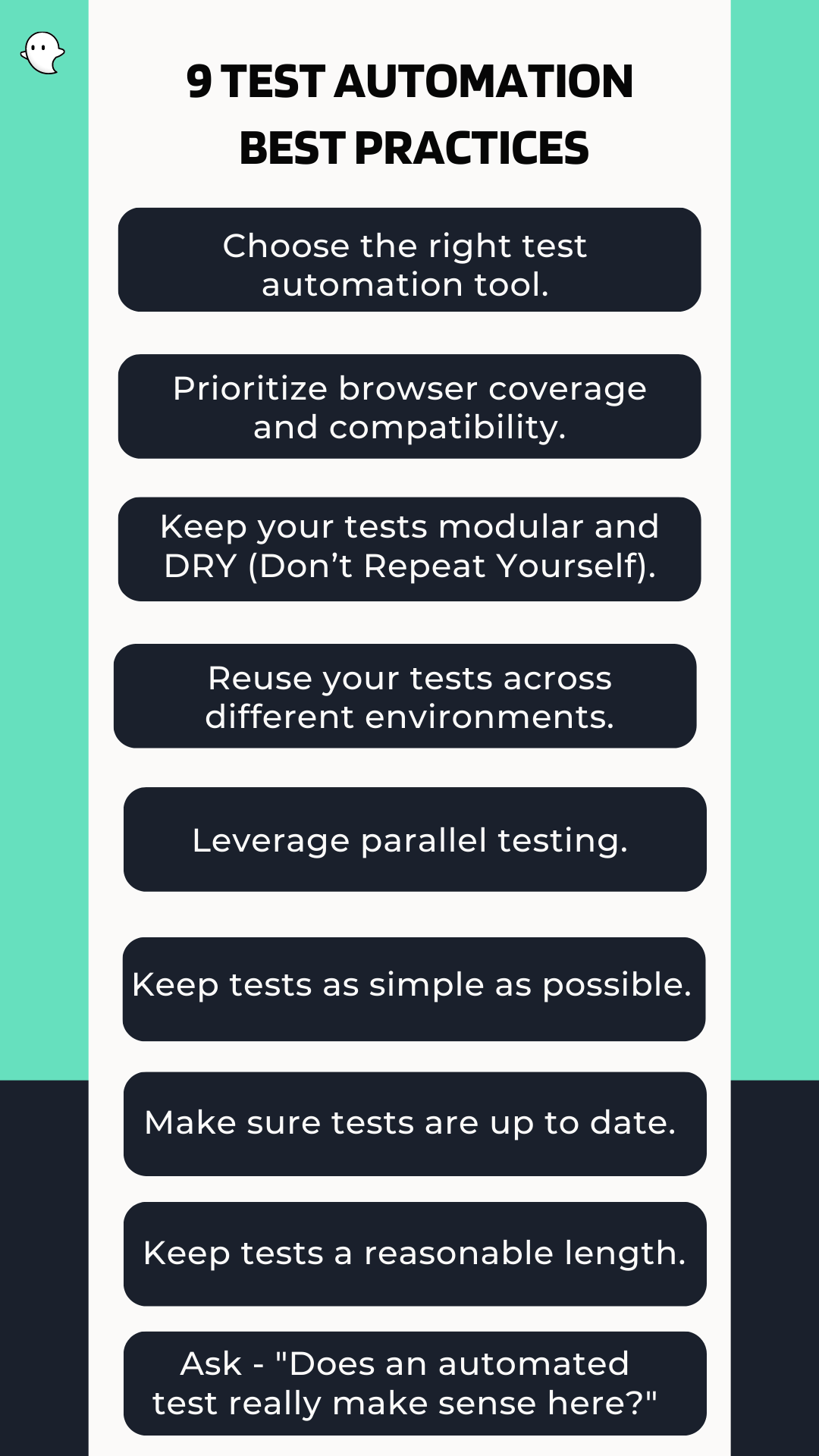

The true workload ultimately comes with the maintenance and evolution of your tests, so it’s extremely important to design your tests in a logical and maintainable way. Below are nine test automation best practices that we suggest when building Ghost Inspector tests, and automated browser tests in general.

1. Choose the right test automation tool.

When it comes to test automation, choosing the right tool is crucial. There are so many options out there that it can be overwhelming to decide where to start. In order to make the best choice, you need to consider several key factors, including:

- What kind of testing you are doing – different tools excel at different types of testing, so it’s important to find one that fits your needs.

- The level of technical expertise on your team. Some tools are more user-friendly than others, so you’ll want to choose one that matches your team’s skill level.

- The scalability of the tool. Will it be able to handle the growing needs of your project?

Many of our customers choose Ghost Inspector for its affordable pricing and intuitive interface. There’s also no coding required, so it’s simple for non-developers, or really anyone on your team, to build automated browser tests and ensure your website or app is working properly. For more complex testing, we also have Smart logic and documentation for iframes, PDF rendering, file uploads, date pickers, credit card checkouts, 2FA logins, and more.

2. Prioritize browser coverage and compatibility.

One of the main advantages of automated browser testing is that you can test your application on multiple browsers and devices, which helps ensure that it is compatible and responsive across different user environments.

You can use analytics data to identify the most common browsers used by your target audience and test on those first. Additionally, utilizing a cloud-based testing platform can help you test on a vast range of browsers and devices, further increasing your coverage and compatibility.
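As a rough illustration of using analytics data to decide what to test first, here is a minimal Python sketch. The browser shares are made-up sample numbers, and the function name and the 90% target are assumptions chosen for the example, not values from any analytics tool.

```python
def browsers_to_cover(usage_percent, target_percent=90):
    """Pick the most-used browsers until their combined share reaches the target.

    usage_percent maps a browser name to its share of visits (whole percent).
    Returns the selected browsers, most used first, and their combined share.
    """
    selected = []
    covered = 0
    ranked = sorted(usage_percent.items(), key=lambda item: item[1], reverse=True)
    for browser, share in ranked:
        if covered >= target_percent:
            break
        selected.append(browser)
        covered += share
    return selected, covered


# Hypothetical analytics export: share of visits per browser.
SAMPLE_USAGE = {"Chrome": 62, "Safari": 21, "Firefox": 8, "Edge": 6, "Other": 3}
```

With the sample data, `browsers_to_cover(SAMPLE_USAGE)` selects Chrome, Safari, and Firefox, which together account for 91% of visits. Those three would be the first candidates for your test runs, with the remaining browsers added as time allows.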

3. Keep your tests modular and DRY (Don’t Repeat Yourself).

Creating automated test cases can be a time-consuming process, and you don’t want to reinvent the wheel each time you run a new test. That’s why designing your test cases for reusability is one of the most critical test automation best practices. You should aim to create modular test cases that can be reused across multiple test scripts. Avoid hard-coding unique elements such as user credentials and form data; instead, use variables, and store them in separate data files or databases. This will make it much easier to maintain and update your test scripts when changes occur to the application.
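To make the idea of variables and separate data concrete, here is a small Python sketch. It is not how Ghost Inspector stores tests internally. The step format, the environment names, and the `render_steps` helper are all hypothetical, and the JSON is inlined only to keep the example self-contained (in practice it would live in its own file).

```python
import json
from string import Template

# Hypothetical test data, kept apart from the test steps themselves.
TEST_DATA = json.loads("""
{
  "staging": {"base_url": "https://staging.example.com", "username": "qa-user"},
  "production": {"base_url": "https://www.example.com", "username": "smoke-user"}
}
""")

# The steps are written once, with placeholders instead of hard-coded values.
LOGIN_STEPS = [
    ("open", Template("$base_url/login")),
    ("type_username", Template("$username")),
]


def render_steps(steps, variables):
    """Fill each step's placeholders with values for one environment."""
    return [(action, template.substitute(variables)) for action, template in steps]


staging_steps = render_steps(LOGIN_STEPS, TEST_DATA["staging"])
```

When a URL or account changes, only the data needs to be updated. The steps stay the same for every environment.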

Bonus tip: use our test import feature.

This feature allows you to section off specific sequences into reusable “sub-tests” and include them within other tests. For example, you could have a “Login” test and reuse that anywhere you need to login. This cuts down on repetition tremendously and ensures that changes only need to be made in one place.

4. Reuse your tests across different environments.

If you wish to execute the same tests on different websites/environments, for instance on both staging and production, there’s no need to duplicate the tests just to change the start URL. Instead, use our API to execute the test or suite and override the start URL on the fly.
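Here is a minimal Python sketch of what such a request URL might look like. The endpoint path and the `apiKey` and `startUrl` parameter names reflect our understanding of the Ghost Inspector API and should be checked against the current API documentation. The suite ID, API key, and staging URL are placeholders, and `build_execute_url` is a hypothetical helper.

```python
from urllib.parse import urlencode

# Assumed base URL for the API; verify against the API documentation.
API_BASE = "https://api.ghostinspector.com/v1"


def build_execute_url(suite_id, api_key, start_url):
    """Build a suite execution URL that overrides the start URL for this run."""
    query = urlencode({"apiKey": api_key, "startUrl": start_url})
    return f"{API_BASE}/suites/{suite_id}/execute/?{query}"


# Placeholder values; substitute your own suite ID, API key, and environment URL.
staging_run = build_execute_url("SUITE_ID", "API_KEY", "https://staging.example.com")
```

Requesting the resulting URL with any HTTP client (for example from a CI job) would trigger the same suite against staging, while the stored tests keep their original start URL.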

5. Leverage parallel testing.

There are a few reasons why we consider running tests in parallel one of the top test automation best practices. First, executing test scripts sequentially can be a time-consuming process, especially if you’re testing on multiple devices or browsers. Parallel testing is an effective way to speed up your test execution and reduce your overall testing time because we can run 30+ tests at once, instead of one at a time.
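To show why this matters, here is a small Python sketch that compares running tests one at a time with running them concurrently. The `run_test` function is a stand-in that just sleeps, and the test names and worker count are made up. It illustrates the general idea, not how Ghost Inspector schedules tests.

```python
import time
from concurrent.futures import ThreadPoolExecutor

# Hypothetical test names.
TESTS = ["login", "search", "add-to-cart", "checkout", "logout"]


def run_test(name):
    """Stand-in for executing one browser test; sleeps instead of doing real work."""
    time.sleep(0.05)
    return name, "passed"


def run_sequentially(tests):
    """Run tests one after another; total time grows with the number of tests."""
    return [run_test(name) for name in tests]


def run_in_parallel(tests, max_workers=5):
    """Run tests concurrently; results come back in the same order as the input."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return list(pool.map(run_test, tests))
```

Both functions return the same results, but the sequential version takes roughly five times the duration of a single test, while the parallel version takes roughly the duration of the slowest one. This only works cleanly when the tests don’t depend on each other’s state.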

Which brings me to my next point about keeping your tests independent and focused on a single task…

6. Keep tests as simple as possible and aim to test one specific feature with each test.

Avoid the temptation of testing a bunch of different things within one test. For example, I wouldn’t suggest creating a single test that logs into your system, updates an account, adds an item to the shopping cart, and then checks out. That test is going to be brittle, since any issue along that workflow could break it, and when it breaks, you won’t know specifically where the issue is without a good deal of review. Try to split that workflow into individual tests that each cover one feature. This may require you to repeat specific operations a number of times, like logging in, but the test import feature described in suggestion #3 above will make that very easy.

7. Keep your tests up to date.

As your application evolves over time, so should your test scripts. Keeping your test cases up to date ensures that you’re testing the latest features and functionality, and identifying potential problems before they hit the production environment. When updating your tests, focus on areas that have changed within the application, such as new features, updates to existing features, or changes to the UI. Regularly updating your tests also improves your test coverage and helps prevent issues that may arise from changes elsewhere in the application.

8. Keep tests to a reasonable length.

There’s currently no hard test length limit (other than 10 minutes of run time). However, in my experience, the shorter your test is, the more durable and effective it will be. If you get beyond 30 to 40 steps, your test may be starting to get too complicated. That’s not because Ghost Inspector can’t execute it, but because it’s more likely that something will go wrong and it’ll be difficult to troubleshoot. If you have a test that’s 100 steps long and step #97 fails, trying to reproduce that scenario in your browser can be painstaking work. Testing specific features may require you to do a number of things to get the test into a certain situation, but try to stay conscious of how long your test is becoming.

9. Sometimes you have to ask yourself, “Does an automated test really make sense here?”

Every type of automated testing out there comes with a “maintenance fee”, from unit tests up through end-to-end tests. As the complexity of the test increases and the volatility of the feature you’re testing increases, so too does the required maintenance. The primary motivation for automated testing is to save you time. This happens when the automated “maintenance fee” is cheaper than the cost of manually testing a feature — but realistically, that’s not always going to be the case. There are sometimes going to be situations where it’s just not convenient to maintain a Ghost Inspector test, even if you can technically “make it work”. You will need to use your judgement as to whether an automated test makes sense, given the situation.
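One way to frame that judgement is as simple arithmetic. The Python sketch below is a back-of-the-envelope model with hypothetical function names and made-up numbers. It compares only recurring manual effort with recurring maintenance effort and ignores everything else (such as the time to build the test in the first place).

```python
def monthly_savings_minutes(manual_minutes_per_run, runs_per_month, maintenance_minutes_per_month):
    """Minutes saved per month: manual effort avoided minus the maintenance fee."""
    return manual_minutes_per_run * runs_per_month - maintenance_minutes_per_month


def automation_makes_sense(manual_minutes_per_run, runs_per_month, maintenance_minutes_per_month):
    """True when the maintenance fee is cheaper than testing the feature by hand."""
    savings = monthly_savings_minutes(
        manual_minutes_per_run, runs_per_month, maintenance_minutes_per_month
    )
    return savings > 0
```

For example, a check that takes 10 minutes by hand and runs 20 times a month is worth automating if maintenance costs about an hour a month, since it saves 140 minutes. The same check run only twice a month would cost 40 minutes more than it saves.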

Automated browser testing can be complex, time-consuming, and prone to errors if not done right. However, adopting these test automation best practices can help you overcome these challenges and increase the efficiency and effectiveness of your testing efforts. In today’s fast-paced development environment, where the pressure to release applications quickly is higher than ever, implementing these best practices will solidify your position as a trusted QA tester or developer, help reduce application downtime, and increase user satisfaction.